Set up a dataflow to write messages from Pub/Sub topics to a BigQuery table in Google Cloud Platform (GCP)

Google Cloud Dataflow is a data processing service that can be used for both streaming and batch applications. Users can set up pipelines in Dataflow to integrate and process large datasets.

With Pub/Sub, users can set up Dataflow pipelines that write messages from a Pub/Sub topic or subscription to a BigQuery table.

The IoT Cloud Tester application provides an easy interface for setting up a dataflow that writes messages from a Pub/Sub topic to a BigQuery table in Google Cloud Platform.

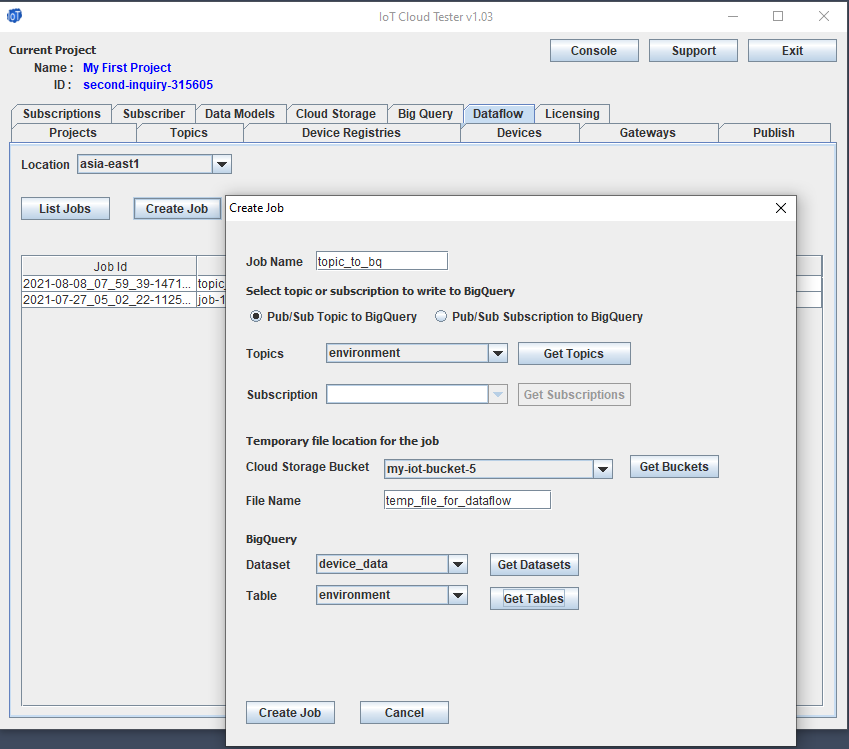

To set up a dataflow that writes messages from a Pub/Sub topic to a BigQuery table:

- In the 'Dataflow' tab, click the 'Create Job' tab.

- Enter the job name.

- Select the 'Pub/Sub Topic to BigQuery' option.

- Get the list of topics and select one.

- Get the available Cloud Storage buckets for the project and select one.

- Enter the file name. The dataflow uses this file as its temporary location in the bucket.

- Set up the dataset and table to be used for writing the messages from the Pub/Sub topic. Note that the table schema should match the structure of the Pub/Sub topic messages.
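The schema note in the last step can be illustrated with a small check. This is a minimal sketch and not part of IoT Cloud Tester: the column names (`device_id`, `temperature`, `humidity`) and the helper `check_message` are hypothetical, because the actual columns of the table are not listed in this article. It compares the fields and Python types of a decoded JSON message against an expected column list.

```python
import json

# Hypothetical schema for the target table: column name -> accepted Python types.
# The real table's columns are not listed in this article.
EXPECTED_SCHEMA = {
    "device_id": (str,),
    "temperature": (int, float),
    "humidity": (int, float),
}


def check_message(raw, schema=EXPECTED_SCHEMA):
    """Return a list of problems; an empty list means the message fits the schema."""
    problems = []
    message = json.loads(raw)
    for column, types in schema.items():
        if column not in message:
            problems.append(f"missing field: {column}")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif isinstance(message[column], bool) or not isinstance(message[column], types):
            problems.append(f"wrong type for field: {column}")
    for key in message:
        if key not in schema:
            problems.append(f"unexpected field: {key}")
    return problems
```

Running a check like this against a sample message before creating the job is one way to catch a mismatch early.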

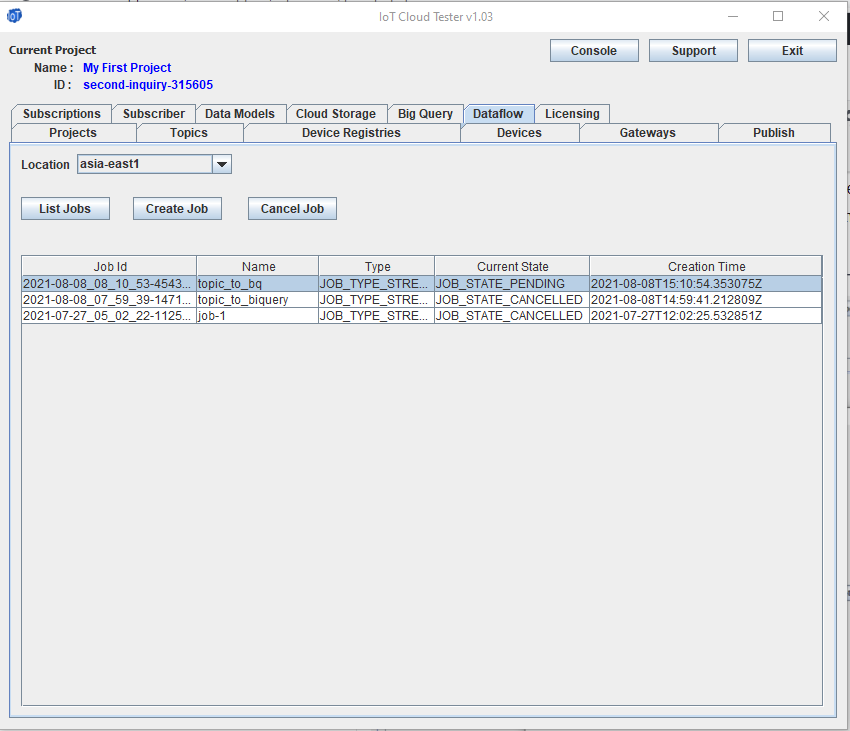

The Dataflow job 'topic_to_bq' is created immediately with a pending status.

A POST request is made to GCP to create the Dataflow job. In this case, we're using the prebuilt template PubSub_to_BigQuery to write Pub/Sub topic messages to BigQuery.

POST https://dataflow.googleapis.com/v1b3/projects/second-inquiry-315605/locations/asia-east1/templates:launch?gcsPath=gs://dataflow-templates/latest/PubSub_to_BigQuery HTTP/1.1

The request body:

{"jobName":"topic_to_bq","environment":{"tempLocation":"gs://my-iot-bucket-5/temp_file_for_dataflow","additionalExperiments":[],"bypassTempDirValidation":false,"ipConfiguration":"WORKER_IP_UNSPECIFIED"},"parameters":{"outputTableSpec":"second-inquiry-315605:device_data.environment","inputTopic":"projects/second-inquiry-315605/topics/environment"}}

Server response for job creation:

    {
      "job": {
        "id": "2021-08-08_08_10_53-4543477010421041882",
        "projectId": "second-inquiry-315605",
        "name": "topic_to_bq",
        "type": "JOB_TYPE_STREAMING",
        "currentStateTime": "1970-01-01T00:00:00Z",
        "createTime": "2021-08-08T15:10:54.353075Z",
        "location": "asia-east1",
        "startTime": "2021-08-08T15:10:54.353075Z"
      }
    }
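The launch request shown above can be assembled from the values entered in the form. The following is a minimal sketch using only the Python standard library; the function name `build_launch_request` and its parameter names are illustrative assumptions, not the application's actual code. It only builds the URL and JSON body and does not send anything, and it includes only the body fields that map directly to the form inputs.

```python
import json
from urllib.parse import urlencode

DATAFLOW_API = "https://dataflow.googleapis.com/v1b3"
TEMPLATE_PATH = "gs://dataflow-templates/latest/PubSub_to_BigQuery"


def build_launch_request(project, location, job_name, topic, bucket, temp_file, dataset, table):
    """Return (url, body) for a templates:launch call; nothing is sent."""
    # Keep ':' and '/' unescaped so the URL matches the form shown in this article.
    query = urlencode({"gcsPath": TEMPLATE_PATH}, safe=":/")
    url = f"{DATAFLOW_API}/projects/{project}/locations/{location}/templates:launch?{query}"
    body = {
        "jobName": job_name,
        "environment": {"tempLocation": f"gs://{bucket}/{temp_file}"},
        "parameters": {
            # Template parameters taken from the request body shown above.
            "outputTableSpec": f"{project}:{dataset}.{table}",
            "inputTopic": f"projects/{project}/topics/{topic}",
        },
    }
    return url, json.dumps(body)


url, body = build_launch_request(
    "second-inquiry-315605", "asia-east1", "topic_to_bq",
    "environment", "my-iot-bucket-5", "temp_file_for_dataflow",
    "device_data", "environment",
)
print(url)
print(body)
```

To actually send the request, the caller would also need an OAuth 2.0 access token for the project, passed in an `Authorization: Bearer` header.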

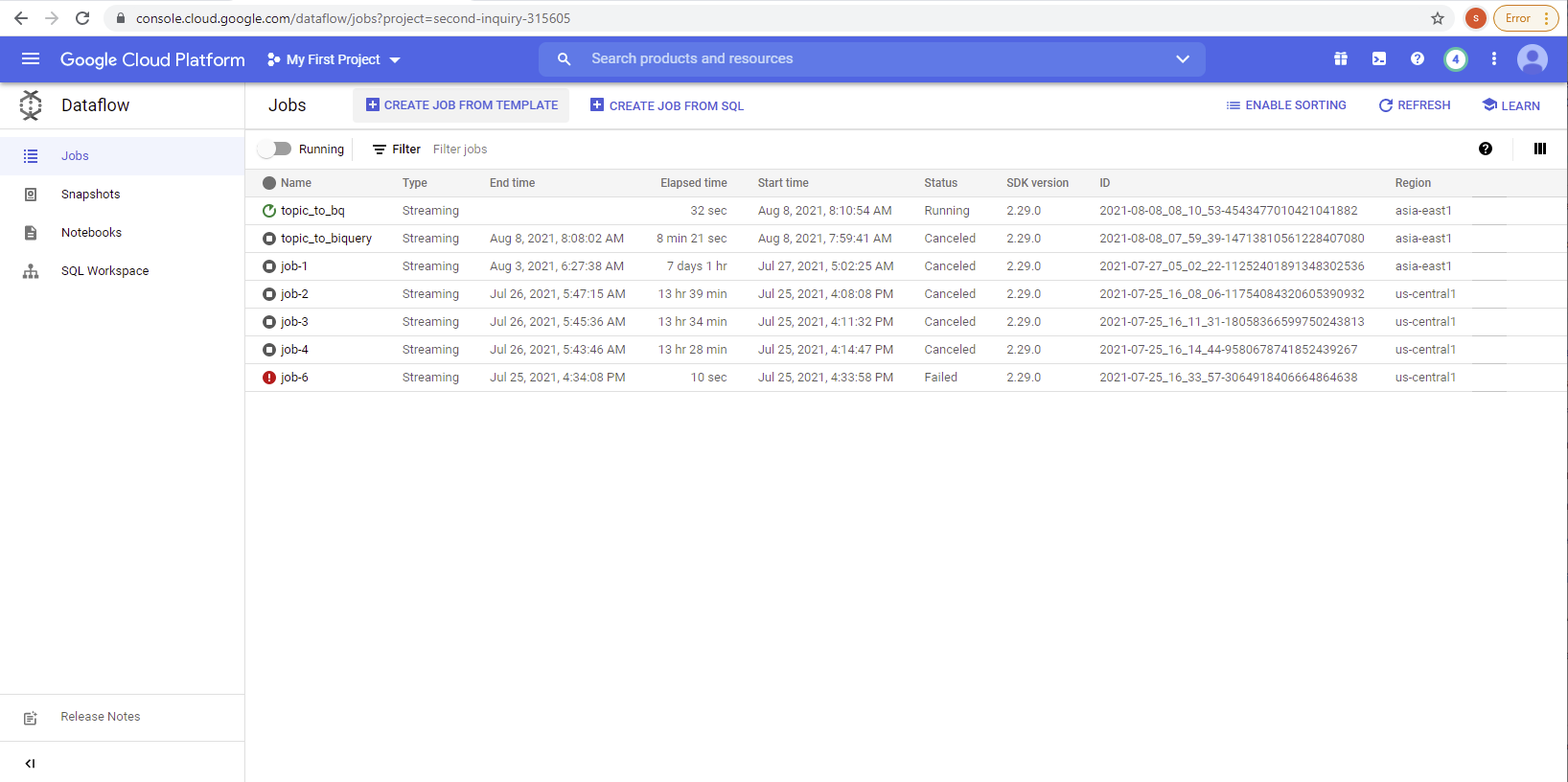

The newly created Dataflow job can be viewed in the Google Cloud console.
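The job creation response carries everything needed to locate the job later. The sketch below, with the hypothetical helper name `summarize_job`, parses a response of the shape shown earlier and derives two URLs: the `jobs.get` endpoint of the Dataflow REST API, which can be polled for the job state, and a console link. The console URL pattern is an assumption and should be checked against your own console session.

```python
import json


def summarize_job(response_text):
    """Extract the fields needed to locate a job from a templates:launch response."""
    job = json.loads(response_text)["job"]
    project, location, job_id = job["projectId"], job["location"], job["id"]
    return {
        "name": job["name"],
        "type": job["type"],
        # jobs.get endpoint of the Dataflow REST API, for polling the job state.
        "status_url": (
            "https://dataflow.googleapis.com/v1b3/"
            f"projects/{project}/locations/{location}/jobs/{job_id}"
        ),
        # Assumed console URL pattern; verify it in your own console session.
        "console_url": (
            "https://console.cloud.google.com/dataflow/jobs/"
            f"{location}/{job_id}?project={project}"
        ),
    }


sample = json.dumps({
    "job": {
        "id": "2021-08-08_08_10_53-4543477010421041882",
        "projectId": "second-inquiry-315605",
        "name": "topic_to_bq",
        "type": "JOB_TYPE_STREAMING",
        "location": "asia-east1",
    }
})
summary = summarize_job(sample)
print(summary["status_url"])
```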

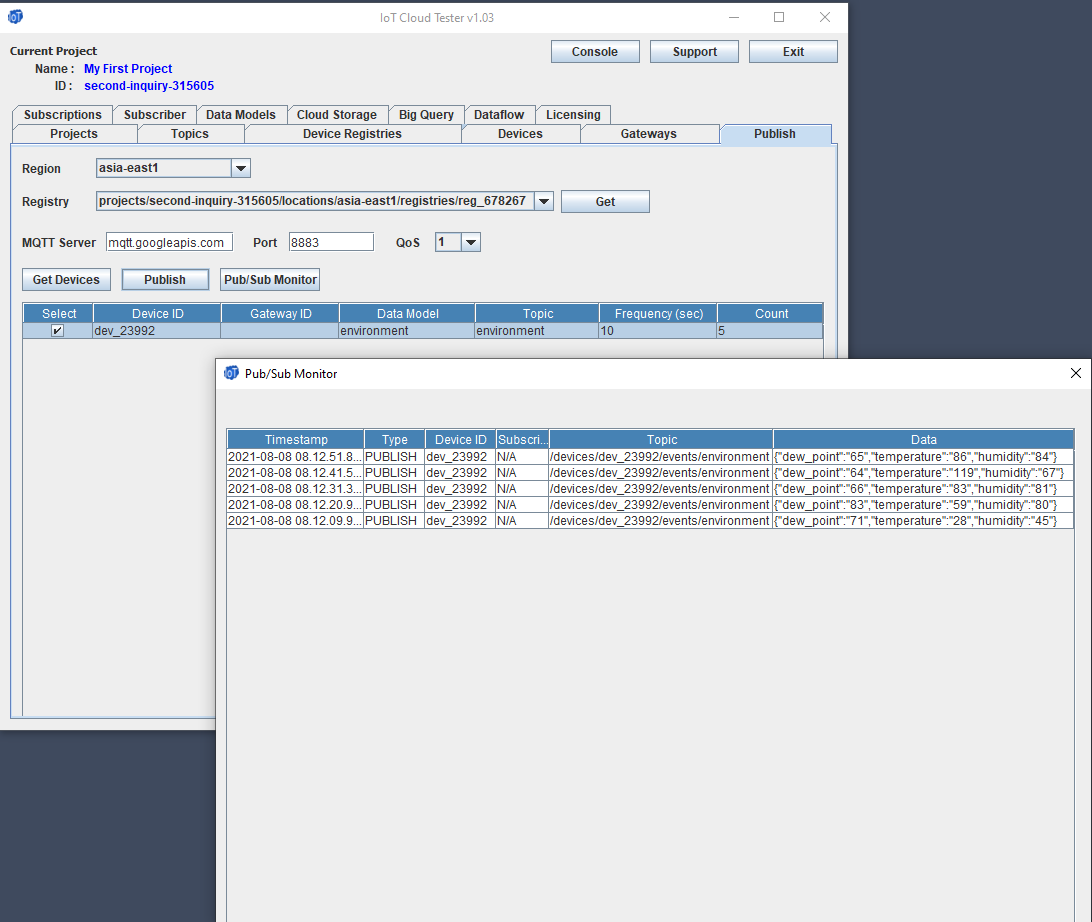

Now let us see the dataflow in action. We'll have a device publish messages to that topic and verify that the data is written to the BigQuery table.

Below, device 'dev_23992' is publishing to the topic 'environment'. Above, we set up a Dataflow job that writes messages from that topic to the 'environment' table in the device_data dataset in BigQuery.
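For testing without a device, a message can also be published to the topic directly through the Pub/Sub REST API, independently of how the device in the screenshot publishes. The following is a minimal sketch that only builds the `topics.publish` request; the helper name `build_publish_request` and the payload fields (`device_id`, `temperature`, `humidity`) are hypothetical, since the real message structure is not listed in this article. Pub/Sub expects the message data to be base64-encoded.

```python
import base64
import json


def build_publish_request(project, topic, payload):
    """Return (url, body) for a Pub/Sub topics.publish call; nothing is sent."""
    url = f"https://pubsub.googleapis.com/v1/projects/{project}/topics/{topic}:publish"
    # The 'data' field of a Pub/Sub message must be base64-encoded bytes.
    data = base64.b64encode(json.dumps(payload).encode("utf-8")).decode("ascii")
    body = {"messages": [{"data": data}]}
    return url, json.dumps(body)


# Hypothetical payload; the fields must match the BigQuery table schema.
payload = {"device_id": "dev_23992", "temperature": 21.5, "humidity": 40}
pub_url, pub_body = build_publish_request("second-inquiry-315605", "environment", payload)
print(pub_url)
```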

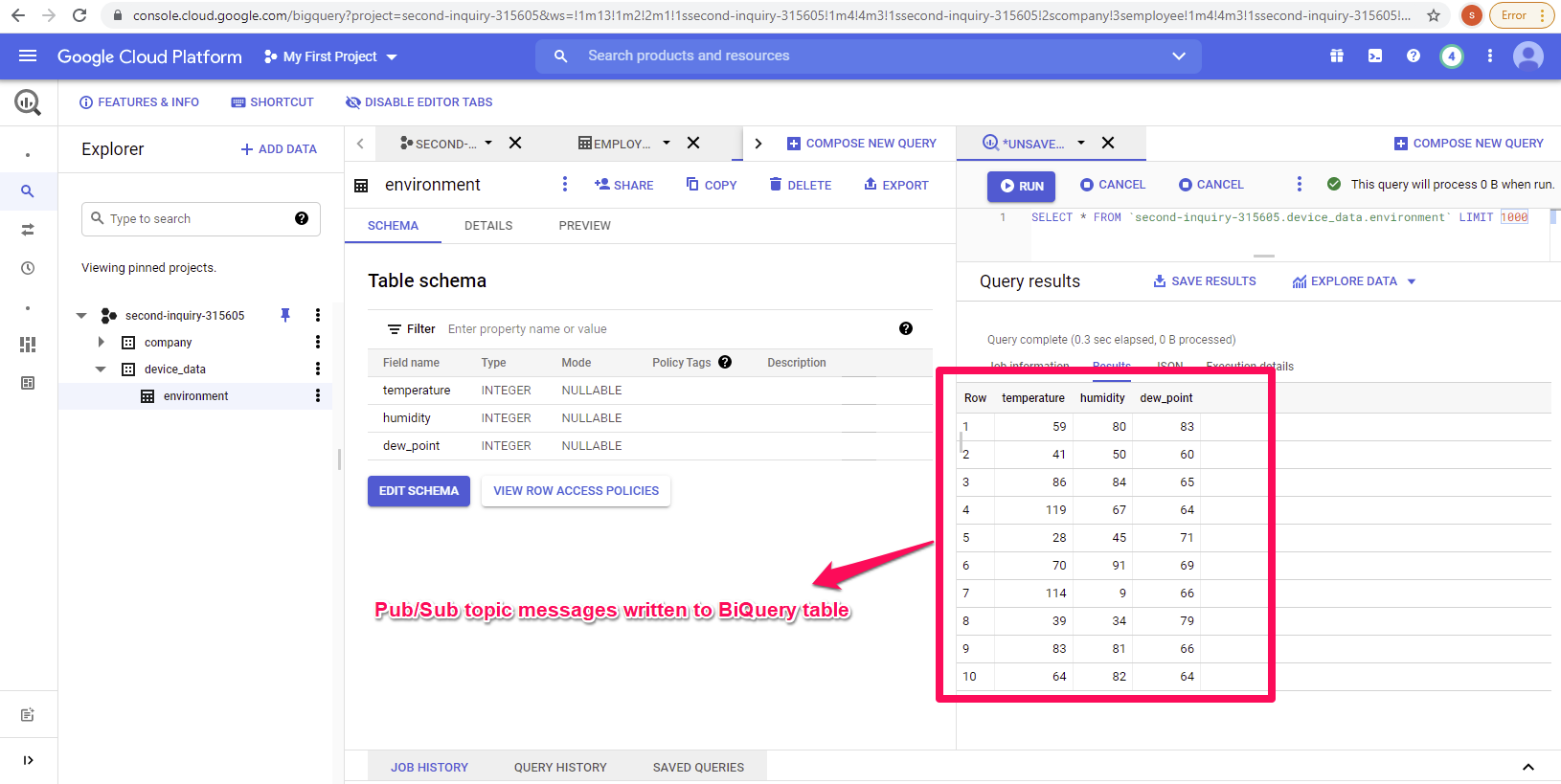

We can verify in BigQuery that the streaming topic messages are written to the table.
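The same verification can be scripted. The sketch below builds a request for the BigQuery REST `jobs.query` method that selects a few rows from the target table; the helper names and the row limit are illustrative assumptions. It also converts the `project:dataset.table` form used in `outputTableSpec` to the backtick-quoted `project.dataset.table` form that standard SQL expects.

```python
import json


def table_spec_to_sql_name(table_spec):
    """Convert 'project:dataset.table' to a backtick-quoted standard SQL name."""
    project, rest = table_spec.split(":", 1)
    return f"`{project}.{rest}`"


def build_query_request(table_spec, limit=10):
    """Return (url, body) for a BigQuery jobs.query call; nothing is sent."""
    project = table_spec.split(":", 1)[0]
    url = f"https://bigquery.googleapis.com/bigquery/v2/projects/{project}/queries"
    body = {
        "query": f"SELECT * FROM {table_spec_to_sql_name(table_spec)} LIMIT {int(limit)}",
        "useLegacySql": False,  # backtick-quoted names require standard SQL
    }
    return url, json.dumps(body)


bq_url, bq_body = build_query_request("second-inquiry-315605:device_data.environment")
print(json.loads(bq_body)["query"])
```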